Data Acquisition

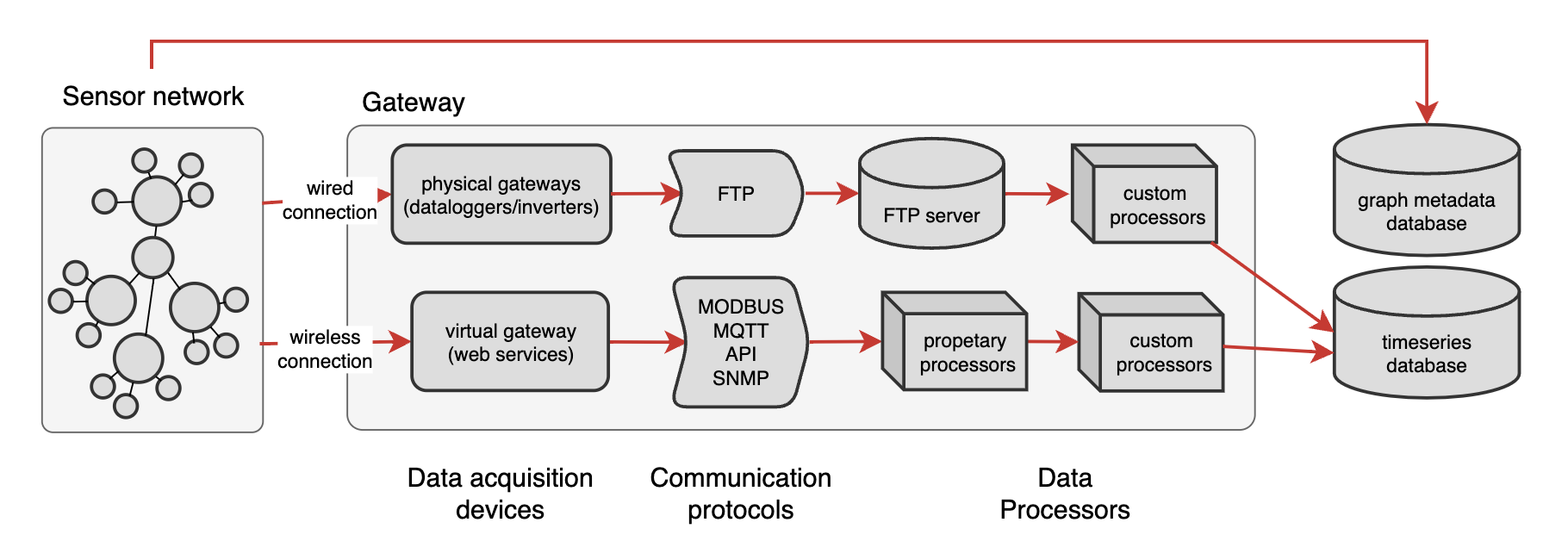

The data acquisition step is the process through which data are collected from the previously described energy and equipment systems by means of the installed monitoring infrastructure. This step also encompasses the processing and storage of the acquired data, to ensure their availability for further exploitation in the application layer. As illustrated in Figure \ref{fig:data-acquisition}, the data acquisition process can be carried out through the definition and development of an appropriate gateway. A gateway is an intermediary between the sensing networks (i.e., raw data) and a high-level data structure (i.e., databases and cloud services) [@gloria2017design; @beniwal2022systematic]. It is responsible for acquiring raw sensor signals and transforming them into processed data suitable for database storage. The nature of the gateway is specific to the sensor network: it can be either a physical gateway, such as a data logger that converts analog and digital inputs into an appropriate form, or a virtual gateway, such as proprietary software that internally converts raw data and exposes them via a wired LAN connection. Depending on the type of gateway and sensor, data transmission can occur through various communication protocols, after which the data must be adequately processed. This section details each step of the acquisition pipeline.

Sensor network

The monitoring infrastructure at PoliTo has evolved progressively over the years, resulting in a diverse sensor network composed of devices with varying accuracy, technologies, and manufacturers. In the case study analyzed here, this heterogeneous mix reflects decades of incremental installations and upgrades. Since 2014, the number of installed sensors has grown steadily, coinciding with the renovation and metering of an increasing number of campus facilities. At the time of writing, the total number of installed and operational sensors is 411. This figure covers the sensors that were systematically surveyed through a comprehensive census carried out within the scope of this thesis with the support of the PoliTo technical team. The survey was aimed at obtaining a detailed and organized inventory to improve maintenance planning and facilitate the integration of the monitoring infrastructure. Looking ahead, PoliTo's administration envisions expanding the sensing infrastructure, with the goal of coordinating and harmonizing the monitoring efforts of individual research groups and creating a cohesive, vendor-agnostic, and open-source platform for data acquisition and management, such as the one described in this thesis.

The number of installed sensors per type is reported in Table~\ref{tab:sensors-installed}. As can be seen, 65% of the installed sensors are active power sensors, whose purpose is to measure the active power of a specific piece of electrical equipment (e.g., transformer, photovoltaic production, lighting). This kind of sensor is fundamental for the analysis carried out in this thesis, since it makes it possible to perform energy balances at different levels, from meter level to sub-meter and component level. Further information about the hierarchy of these sensors is provided in the data modeling section. Some of the installed power sensors also measure reactive power (~3%) and power factor (~6%). These two measurements make it possible to continuously monitor the capacity loads, in particular those of the medium-voltage equipment.

| Sensor type | Number | Percentage |
|---|---|---|
| Active Power Sensor | 286 | 65% |
| Temperature Sensor | 29 | 7% |
| Power Factor Sensor | 27 | 6% |
| Water Flow Sensor | 17 | 4% |
| Reactive Power Sensor | 15 | 3% |
| Differential Pressure Sensor | 10 | 2% |
| Solar Irradiance Sensor | 7 | 2% |
| Current Sensor | 7 | 2% |
| CO₂ Sensor | 7 | 2% |
| Relative Humidity Sensor | 3 | 1% |
| Thermal Power Sensor | 3 | 1% |
| Total | 411 | 100% |

Other sensors, such as the current and solar irradiance sensors, were installed to monitor the efficiency and energy production of the photovoltaic plants previously described. The CO₂ and temperature sensors were installed in classrooms and offices to monitor indoor air quality and how well the HVAC systems maintain acceptable thermo-hygrometric conditions in the occupied zones of the campus. Finally, other sensors measure specific quantities related to HVAC and chiller components: for example, the thermal power sensors measure the thermal power of heating and cooling coils, and the differential pressure sensors measure the pressure differences in ducts and across filters, enabling proactive maintenance of such equipment.

Gateway

The gateway is the core of the data acquisition pipeline, serving as a critical bridge between IoT devices or sensors and the databases [@gloria2017design]. It plays a key role in data collection, device connectivity, and intelligent data processing. Gateways enable the connection of various devices, such as sensors that were not natively designed for internet connectivity, thereby facilitating the integration of traditional systems with modern IoT networks [@beniwal2022systematic]. Gateways perform multiple functions, including but not limited to (i) data aggregation from sensors, (ii) translation between different communication protocols, (iii) data pre-processing, and (iv) secure communication to and from the cloud or a central server. As shown in Figure~\ref{fig:data-acquisition}, the gateway structure primarily consists of data acquisition hardware, which can be either physical or virtual, and a processing pipeline that collects data through different protocols, formats them properly, and sends them to the database through a direct connection. This section presents the main gateways in the PoliTo infrastructure, with a particular focus on the communication protocols, the data processing, and the reasoning behind the setup of the data acquisition pipeline.

Data-loggers

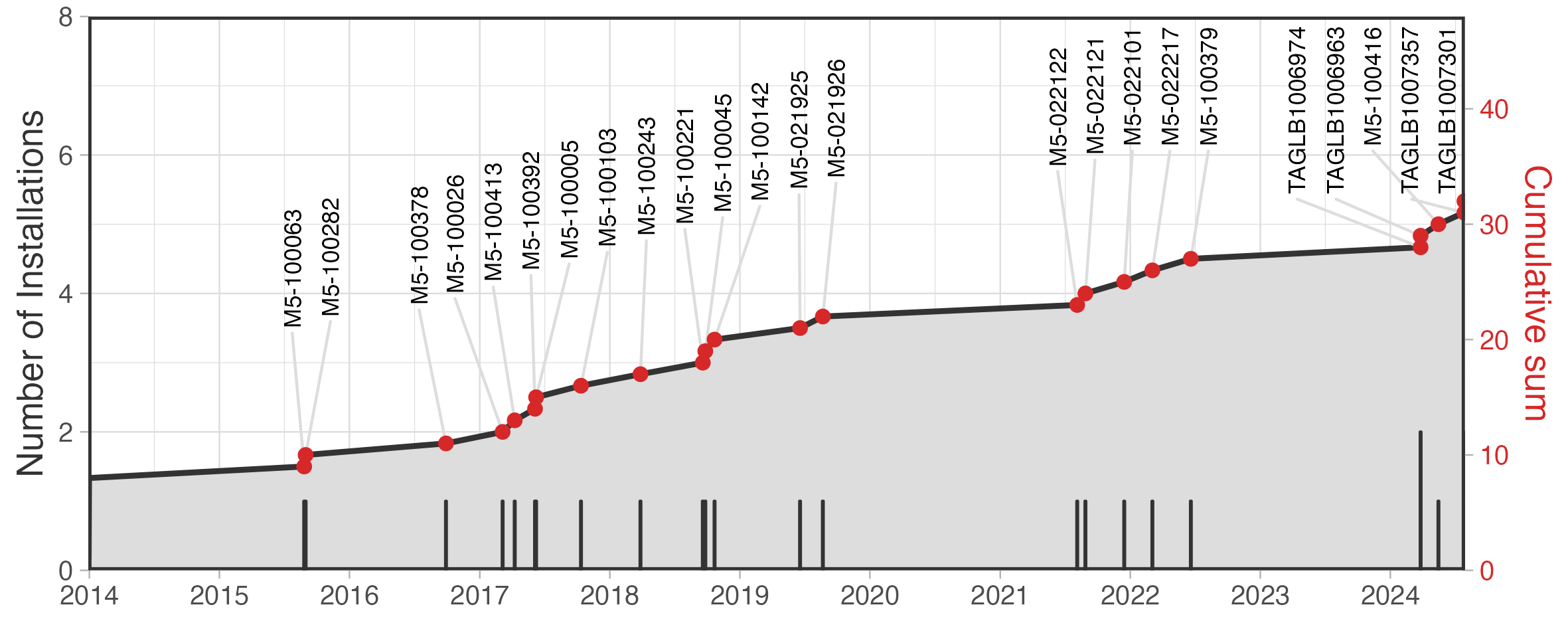

Most of the PoliTo monitoring infrastructure relies on physical gateways identified as data-loggers. A data logger is an electronic device that records data over time through either built-in or external analog or digital instruments and sensors. At the time of writing, 32 data-loggers are installed; Figure~\ref{fig:datalogger-installation-timeline} shows the total number of installations from 2014 to now.

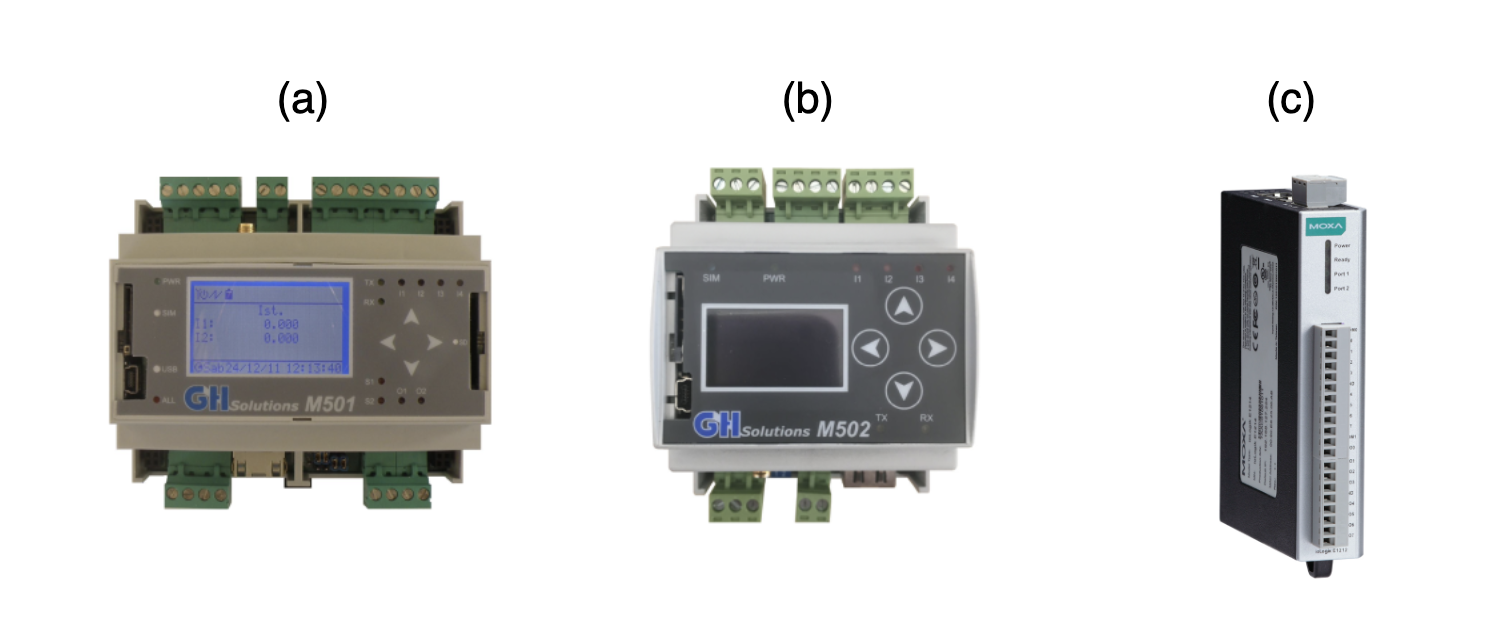

The predominant type of data-logger installed belongs to the M500 series developed by GH Solutions [@ghsolutions], including versions M501 and M502 (reported in Figure~\ref{fig:dataloggers_images}). These devices, identified by the M5 serial number in Figure~\ref{fig:datalogger-installation-timeline}, convert raw impulses from the pulse emitters of external sensors into physical quantities and send the processed data to a time series database. Configuring the acquisition channels (up to 64) of such devices involves physically wiring the data logger to the sensors and manually configuring each channel to define the parameters and units of measure that transform electrical inputs into meaningful values of the monitored variable [@ghsolutions]. This configuration was performed by the operation and maintenance staff during installation and can be modified at any time through terminal commands (see [@ghsolutions] for further details). These devices are connected to the PoliTo internal LAN and are configured to process raw input data into a proprietary text file format, which is periodically sent to an FTP server. The data stored on the FTP server then undergo further custom stream processing to convert them into the proper format for database storage. Another type of physical data logger installed is the MOXA series [@moxa], identified by the TAG serial number in Figure~\ref{fig:datalogger-installation-timeline}, which is used specifically for monitoring air handling units (AHUs). This installation was carried out by a research group studying the operational parameters of the HVAC systems serving some classrooms of the main campus. In this case, the conversion from raw value to measured quantity is performed by the proprietary software itself, and the gateway exposes a set of APIs, which are used by a custom-made processor that transforms the data into the proper structure for database storage.
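The pulse-to-quantity conversion performed by a channel can be illustrated with a minimal sketch. The class `ChannelConfig`, its fields, the pulse weight of 0.01 kWh, and the 5-minute window are assumptions made for illustration; they do not reproduce the actual configuration syntax or firmware of the data-loggers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ChannelConfig:
    """Hypothetical per-channel settings; field names are illustrative only."""
    name: str
    unit: str                  # unit of the accumulated quantity, e.g. "kWh"
    quantity_per_pulse: float  # assumed pulse weight, e.g. 0.01 kWh per pulse


def pulses_to_quantity(pulse_count: int, cfg: ChannelConfig) -> float:
    """Quantity accumulated over the sampling window."""
    if pulse_count < 0:
        raise ValueError("pulse count cannot be negative")
    return pulse_count * cfg.quantity_per_pulse


def energy_to_mean_power(energy_kwh: float, window_s: float) -> float:
    """Mean power (kW) over a window, given the energy (kWh) metered in it."""
    if window_s <= 0:
        raise ValueError("window must be positive")
    return energy_kwh * 3600.0 / window_s


cfg = ChannelConfig(name="transformer_1", unit="kWh", quantity_per_pulse=0.01)
energy = pulses_to_quantity(250, cfg)        # 250 pulses counted in the window
power = energy_to_mean_power(energy, 300.0)  # assumed 5-minute window
print(f"{energy:.2f} {cfg.unit}, {power:.1f} kW")
```

With these assumed values, 250 pulses correspond to 2.5 kWh, i.e. a mean power of 30 kW over the window.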

All the described data-loggers rely on different kinds of communication protocols to acquire data or send them to the final consumer. The available communication protocols are briefly described as follows:

- Modbus is a serial communication protocol originally developed for communication with programmable logic controllers (PLCs) [@modbus_overview]. It is a simple and widely supported protocol, making it a popular choice for industrial automation, smart grid systems, and other applications where simple and reliable communication is required. Modbus uses a straightforward request-response mechanism, where a controller device sends a request to a field device, and the field device responds with the requested data. The controller device can be a computer, a PLC, or any device capable of initiating communication over a serial or Ethernet connection. The field devices can be sensors, actuators, or any devices capable of responding to requests from the controller device.

- MQTT is a lightweight publish-subscribe network protocol well suited to IoT and machine-to-machine (M2M) communication [@mqtt_standard]. The protocol is open and standardized, and it is widely used in a variety of applications, including home automation, industrial automation, and remote monitoring. It is a simple and efficient messaging protocol that enables devices to communicate with each other over a network, with a focus on minimizing network bandwidth and device resource requirements. MQTT clients communicate by publishing and subscribing to topics, while a broker manages the message routing. This publish-subscribe model allows for asynchronous communication, making MQTT well suited to devices with limited resources or unreliable networks.

- OPC-UA (Open Platform Communications Unified Architecture) is a platform-independent, service-oriented architecture for industrial communication [@opcua_overview]. OPC-UA provides a robust and secure communication protocol for industrial automation systems and devices. It supports complex data structures and provides built-in security features, including encryption and authentication. OPC-UA is designed to be scalable and flexible, supporting a wide range of communication patterns and data models. It is widely used in industrial automation, smart manufacturing, and other applications requiring reliable and secure data exchange.

The proper choice of the communication protocol for data processing and exploitation strongly depends on the acquisition hardware, the reliability of the data exchange (e.g., internet connection), and the actual purposes of data consolidation and acquisition. Trade-offs must be taken into account, and the objective of the data acquisition strategy must be defined in advance; only then can the communication protocol be chosen.
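To make the request-response mechanism described for Modbus more concrete, the following sketch builds a Modbus TCP "Read Holding Registers" request (function code 0x03) and decodes the matching response using only the Python standard library. The frame layout follows the public Modbus specification; the register addresses, unit identifier, and the simulated response bytes are illustrative assumptions and do not refer to any device in the PoliTo infrastructure.

```python
import struct


def build_read_holding_registers(transaction_id: int, unit_id: int,
                                 start_address: int, quantity: int) -> bytes:
    """Build a Modbus TCP 'Read Holding Registers' (0x03) request frame."""
    if not 1 <= quantity <= 125:
        raise ValueError("quantity must be between 1 and 125 registers")
    # PDU: function code, starting address, number of registers (big-endian).
    pdu = struct.pack(">BHH", 0x03, start_address, quantity)
    # MBAP header: transaction id, protocol id (0 = Modbus), length, unit id.
    # The length field counts the unit id byte plus the PDU.
    mbap = struct.pack(">HHHB", transaction_id, 0, len(pdu) + 1, unit_id)
    return mbap + pdu


def parse_read_holding_registers(frame: bytes) -> list[int]:
    """Extract the 16-bit register values from a function 0x03 response."""
    pdu = frame[7:]                 # skip the 7-byte MBAP header
    function_code = pdu[0]
    if function_code & 0x80:        # high bit set: the device reports an error
        raise RuntimeError(f"Modbus exception code {pdu[1]}")
    byte_count = pdu[1]
    return list(struct.unpack(f">{byte_count // 2}H", pdu[2:2 + byte_count]))


# Controller side: ask unit 1 for two registers starting at address 0.
request = build_read_holding_registers(transaction_id=1, unit_id=1,
                                       start_address=0, quantity=2)
print(request.hex())

# Simulated field-device answer carrying the register values 10 and 258.
response = bytes.fromhex("000100000007010304000a0102")
print(parse_read_holding_registers(response))
```

In a real deployment the request would be written to a TCP socket (port 502 by convention) and the response read back from it; a maintained Modbus library would normally be preferred over hand-built frames.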

In the analyzed infrastructure, the principles for data acquisition were set as follows: (i) high reliability of the monitored data, (ii) consolidation of the monitored data, and (iii) the possibility to retrieve historical data at a high sampling frequency, with the option to downsample.
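As an illustration of principle (iii), the sketch below downsamples a high-frequency series into coarser time buckets by averaging. The function name `downsample_mean`, the sample values, and the choice of the mean as aggregation are assumptions for illustration; in practice this operation may well be delegated to the time series database.

```python
from collections import defaultdict
from datetime import datetime, timedelta
from statistics import fmean


def downsample_mean(samples, bucket: timedelta):
    """Average (timestamp, value) samples into fixed-width time buckets.

    Buckets are aligned to the Unix epoch and labelled with their start time.
    Timestamps are assumed to be naive datetimes in a single time zone.
    """
    epoch = datetime(1970, 1, 1)
    buckets = defaultdict(list)
    for ts, value in samples:
        index = (ts - epoch) // bucket  # integer bucket number
        buckets[epoch + index * bucket].append(value)
    return [(start, fmean(values)) for start, values in sorted(buckets.items())]


# Thirteen 5-minute samples starting at 10:00, with values 0.0, 1.0, ..., 12.0.
start = datetime(2024, 1, 1, 10, 0)
samples = [(start + i * timedelta(minutes=5), float(i)) for i in range(13)]

hourly = downsample_mean(samples, timedelta(hours=1))
for bucket_start, mean_value in hourly:
    print(bucket_start.isoformat(), mean_value)
```

The twelve samples between 10:00 and 10:55 collapse into a single hourly value of 5.5, while the original high-frequency series remains available for detailed analyses.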

The primary objective was to acquire data robustly, without losses due to communication failures, rather than to acquire and ingest a high volume of data at the expense of accuracy. For this reason, file transfer via FTP was preferred over the Modbus or MQTT protocols.

The reason behind this choice is that the installed data-loggers can retain the generated files in their internal memory for up to 3 months (depending on file size). In contrast, the Modbus or MQTT protocols, although more modern and efficient, may lead to data loss in case of internet connection failure. This choice proved strategic on several occasions when data-loggers were disconnected from the internet for a period. Such incidents were resolved without data loss, because the retained files were requested on demand, then sent to the FTP server and processed.
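The general store-and-forward idea behind this behaviour can be sketched as follows. This is not the firmware logic of the data-loggers: the class `StoreAndForwardBuffer`, the file names, and the capacity are hypothetical, and the uplink is simulated by a function that simply reports success or failure.

```python
from collections import deque
from typing import Callable


class StoreAndForwardBuffer:
    """Keep generated files locally until the uplink confirms delivery."""

    def __init__(self, capacity: int):
        # When the buffer is full the oldest file is dropped, so retention
        # is bounded, in the same way as device memory is.
        self._pending = deque(maxlen=capacity)

    def add(self, filename: str) -> None:
        self._pending.append(filename)

    def flush(self, send: Callable[[str], bool]) -> int:
        """Deliver pending files in order; stop at the first failure."""
        delivered = 0
        while self._pending:
            if not send(self._pending[0]):
                break
            self._pending.popleft()
            delivered += 1
        return delivered


link = {"up": False}  # simulated network state
received = []         # files that reached the (simulated) server


def send(filename: str) -> bool:
    if link["up"]:
        received.append(filename)
    return link["up"]


buffer = StoreAndForwardBuffer(capacity=1000)
for i in range(3):
    buffer.add(f"log_{i}.txt")
    buffer.flush(send)  # link is down: nothing delivered, nothing lost

link["up"] = True
recovered = buffer.flush(send)  # link restored: the backlog is sent in order
print(recovered, received)
```

A streaming protocol without such a local buffer would have lost the three files generated during the simulated outage.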

For the devices that do not support this approach, it was decided to sample data more frequently, so that missing data can be reconstructed if necessary.

Processors

Processors are software processes that manipulate the data, transforming raw electrical impulses into the final format to be stored in the database.

As described in the data acquisition hardware section, the installed data-loggers already perform the conversion from the raw input value to the appropriate value of the monitored variable. Some data-loggers, like the M500 series, allow analysts to explicitly modify the conversion parameters. More modern data-loggers, such as the MOXA series, enable users not only to set up the conversion but also to correct for sensor drift.
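A conversion with drift correction can be sketched as a linear calibration whose output is adjusted for the drift accumulated since the last calibration. The linear drift model, the class `LinearCalibration`, and all numeric values are assumptions for illustration and do not describe the algorithm implemented by any specific data-logger.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class LinearCalibration:
    """Hypothetical calibration: value = gain * raw + offset."""
    gain: float
    offset: float
    drift_per_day: float = 0.0  # assumed linear drift, in output units per day


def convert(raw: float, cal: LinearCalibration,
            days_since_calibration: float = 0.0) -> float:
    """Scale a raw reading and remove the drift accumulated since calibration."""
    value = cal.gain * raw + cal.offset
    return value - cal.drift_per_day * days_since_calibration


# Illustrative values: a raw signal between 4 and 20 mapped onto 0-100.
cal = LinearCalibration(gain=6.25, offset=-25.0, drift_per_day=0.002)

fresh = convert(12.0, cal)                               # right after calibration
aged = convert(12.0, cal, days_since_calibration=100.0)  # 100 days later
print(fresh, round(aged, 3))
```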

Other gateways, like proprietary equipment, do not allow external users to directly modify these parameters and often do not expose data at all.

In this case study, more than 90% of the data volume is transferred through the FTP protocol. Every 5 minutes, the data-loggers create a text file containing not only the values acquired by each channel but also the data-logger configuration, and send it to an FTP server, where it is stored.

A custom-developed processor then parses the text file, extracts the sensor configuration and measurements, manipulates the sensor values, and transforms them into the proper format for database storage.
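The parsing step can be sketched as follows. Since the actual file format is proprietary and not documented here, the layout used below (header lines starting with `#`, semicolon-separated fields, ISO timestamps) is entirely hypothetical, as are the logger identifier, the sensor names, and the function `parse_logger_file`; only the overall flow of reading the configuration, reading the measurements, and emitting database-ready records reflects the description above.

```python
from datetime import datetime

# Hypothetical file content: configuration lines first, then measurements.
SAMPLE_FILE = """\
#LOGGER;M5-0001
#CHANNEL;1;transformer_1;kW
#CHANNEL;2;pv_plant;kW
2024-01-01T10:00:00;1;152.4
2024-01-01T10:00:00;2;37.9
"""


def parse_logger_file(text: str) -> list[dict]:
    """Turn one logger file into a list of records ready for database storage."""
    channels = {}  # channel id -> (sensor name, unit), read from the header
    records = []
    for line in text.splitlines():
        line = line.strip()
        if not line:
            continue
        fields = line.split(";")
        if fields[0] == "#CHANNEL":
            _, channel_id, name, unit = fields
            channels[channel_id] = (name, unit)
        elif fields[0].startswith("#"):
            continue  # other header lines are ignored in this sketch
        else:
            timestamp, channel_id, raw_value = fields
            name, unit = channels[channel_id]
            records.append({
                "time": datetime.fromisoformat(timestamp),
                "sensor": name,
                "unit": unit,
                "value": float(raw_value),
            })
    return records


records = parse_logger_file(SAMPLE_FILE)
for record in records:
    print(record)
```

Carrying the configuration inside every file means that each file can be interpreted on its own, which is convenient when files are recovered and processed long after they were generated.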