IT infrastructure

This section describes the IT infrastructure on which the BOS was developed. The setup outlined below is the result of careful consideration of existing constraints and challenges, along with a deliberate restructuring of the legacy services that had been used for years to perform monitoring tasks. Before analyzing the underlying IT architecture, it is necessary to introduce two concepts, virtualization and containerization, represented in the following Figure.

- Virtualization is the process of creating an abstraction layer over hardware, allowing a single computer to be divided into multiple Virtual Machines (VMs). Each of those virtual computers (known as guests) uses part of the hardware resources of the main computer (known as the host). Each VM can then operate independently and run a different operating system or set of applications while sharing the resources of a single computer. This process is enabled by the hypervisor, a software layer that takes the physical resources and divides them up so that the virtual environments can use them. The main feature of virtualization is that it allows different operating systems to run on the same hardware. Each virtual machine's operating system (guest OS) performs all the necessary start-up activities, such as bootstrapping and loading the kernel. However, each guest OS is subject to elevated security measures so that it does not acquire full access to the underlying OS files and permissions.

- Containerization is a lightweight alternative to virtualization. Each container is an executable package of software that runs on top of a host OS, and a host can support many containers concurrently. This suits, for example, a microservice architecture, where each container runs as a minimal, resource-isolated process that other containers cannot access. Thus, instead of installing an OS for each virtual machine, containers share the host OS.

As of 2021, when the project started, the monitoring infrastructure relied on a single server hosted on a VM, which ran both the database and the data processing. The technology stack consisted of a MySQL server and processing scripts written in the Perl programming language. This setup had been designed and deployed starting in 2014 and remained in operation until 2021. However, this approach faced limitations: legacy processes running on the same machine became a bottleneck for further improvements, leading to scalability issues. Additionally, because the scripts depended on the OS, upgrading either the OS or the scripts was impossible, which hindered process interconnection and optimization. The overall monitoring infrastructure was not scalable, since the tasks were interdependent and the isolation they needed could not be guaranteed. Moreover, the high latency in parsing and aggregating data, together with the lack of knowledge about and observability of the deployed processes, motivated the proposal of a new layout for the monitoring infrastructure. To overcome these problems and optimize resource allocation, a containerization approach was adopted. As a result, the following improvements were achieved:

- Enhanced Resource Efficiency: Containerization enabled multiple isolated applications to run on the same host, sharing the host's kernel and system resources. This efficiency allowed for a greater number of applications per host, reducing the need for additional hardware and thereby lowering operational costs.

- Improved Scalability: Container-based architectures provided inherent scalability, allowing for the seamless addition or removal of containers to accommodate varying demands. This flexibility enabled rapid scaling of applications without additional hardware or extensive manual configuration.

- Increased Portability: Containers offered significant portability, facilitating smooth transitions between environments, such as from development to production. This consistency ensured that applications performed reliably across diverse deployment settings.

- Strengthened Security: The isolation provided by containers enhanced application security, reducing the risk of vulnerabilities and simplifying security management across the infrastructure.

- Streamlined Deployment and Management: Containerization enabled greater automation in deployment and management processes, significantly reducing the time and resources needed for system upkeep.

In order to be consistent with the organization of this thesis and maintain the duality between the development and deployment concepts introduced earlier, the following sections will introduce from a technical point of view how the services, intended as software services, are developed and deployed.

Services development

The services in the IT infrastructure were developed following a container-based approach relying on Docker containers. Docker is an open-source containerization platform that allows applications to be built, packaged, and deployed as lightweight, portable containers. First released in 2013, it is widely used in a variety of environments, including on-premises servers, cloud platforms, and hybrid environments. It is a popular choice for building modern, microservice-based architectures and is supported by a large and active community of users and developers. Docker uses images to package and deploy applications. Images are templates that define the contents of a container, including the application code, libraries, dependencies, and runtime. This approach not only makes it possible to design portable applications that can be easily moved between environments, but also isolates containers from each other and from the host operating system, making it easy to run multiple applications on the same host without interference. Furthermore, Docker allows resource constraints, such as CPU and memory limits, to be set on containers, so that no container consumes an excessive share of the host resources and each one has the resources needed for its task.
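
As an illustration of the resource constraints mentioned above, the following minimal sketch assembles a `docker run` command that caps the CPU and memory available to a container through the `--cpus` and `--memory` options of the Docker CLI. The helper `docker_run_command`, the image reference, and the container name are hypothetical and are not taken from the BOS code base.

```python
import shlex


def docker_run_command(image, cpus, memory, name=None):
    """Build the argument list for `docker run` with CPU and memory limits.

    `cpus` is the number of CPUs the container may use (fractions allowed),
    `memory` is a Docker size string such as "256m" or "1g".
    """
    if cpus <= 0:
        raise ValueError("cpus must be positive")
    args = ["docker", "run", "--rm", "--detach"]
    if name is not None:
        args += ["--name", name]
    # --cpus and --memory are the Docker CLI options that set the limits.
    args += ["--cpus", str(cpus), "--memory", memory, image]
    return args


if __name__ == "__main__":
    # Hypothetical image and container name, used only for illustration.
    command = docker_run_command(
        "registry.example.com/bos/data-parser:1.4.2",
        cpus=0.5,
        memory="256m",
        name="data-parser",
    )
    # Print the command instead of executing it, so the sketch has no side effects.
    print(shlex.join(command))
```

The command is only printed here. Passing the same list to `subprocess.run` would start the container on a machine where Docker is installed.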

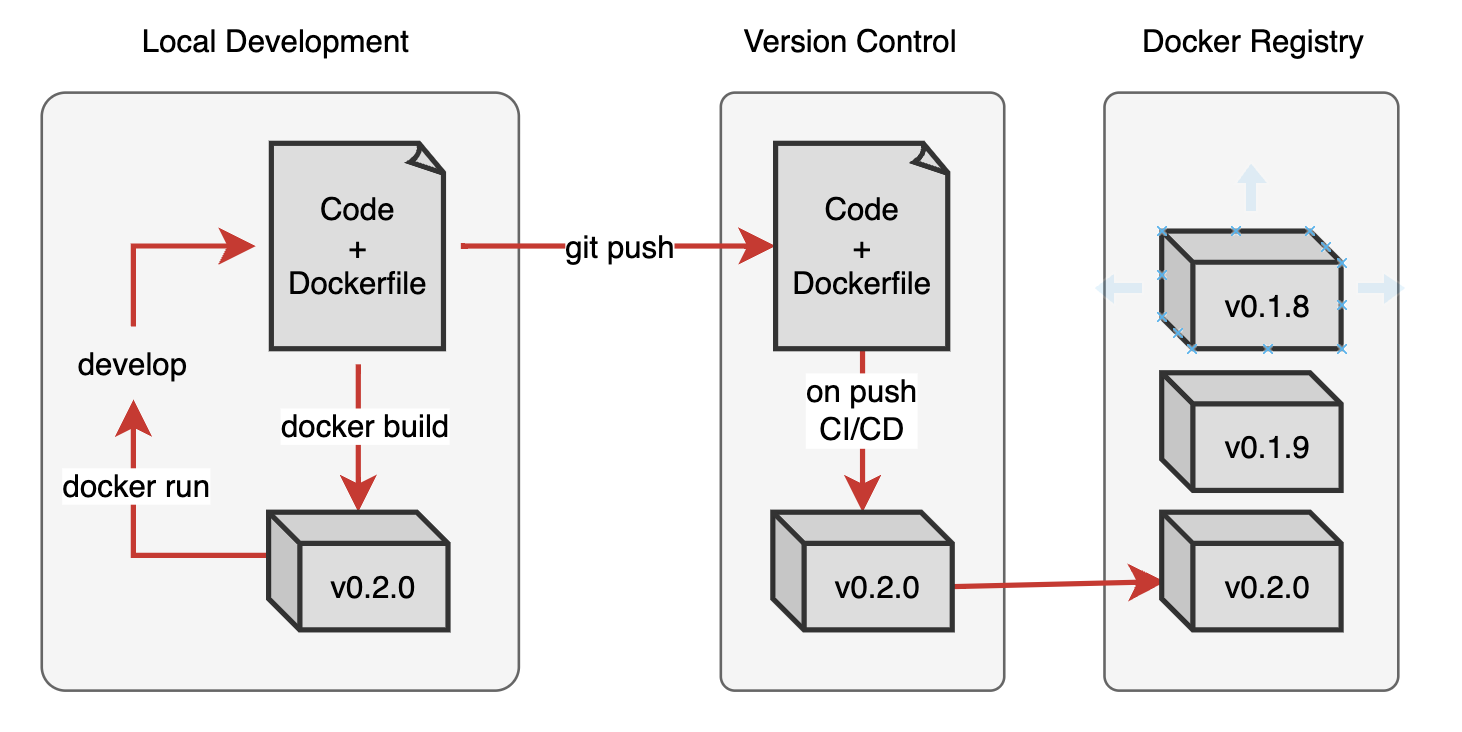

The development process follows the schema reported in the Figure above. An application (for example, a Python data parser) is first developed locally on the developer's machine and OS. In the second step, development continues locally, but the code is executed within a Docker container to ensure that the application runs properly. This wraps the code in a reproducible environment that will be deployed as is in the cloud. The code is then pushed to version control (GitHub in this case), which triggers a CI/CD pipeline that builds the image and pushes it to a private Docker repository. The built images are tagged according to the semantic versioning standard, stored in the repository, and made available to the part of the infrastructure that handles service provisioning.
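
The tagging step can be sketched as follows. This is a simplified illustration that assumes plain `MAJOR.MINOR.PATCH` versions, without the pre-release and build-metadata suffixes that the semantic versioning standard also permits. The repository name and the helper functions are hypothetical and do not reproduce the actual CI/CD pipeline.

```python
import re
from typing import NamedTuple

# MAJOR.MINOR.PATCH with no leading zeros; suffixes are deliberately not handled.
SEMVER_RE = re.compile(r"^(0|[1-9]\d*)\.(0|[1-9]\d*)\.(0|[1-9]\d*)$")


class Version(NamedTuple):
    major: int
    minor: int
    patch: int

    def __str__(self):
        return f"{self.major}.{self.minor}.{self.patch}"


def parse_version(text):
    """Parse a 'MAJOR.MINOR.PATCH' string into a Version."""
    match = SEMVER_RE.match(text)
    if match is None:
        raise ValueError(f"not a MAJOR.MINOR.PATCH version: {text!r}")
    return Version(*(int(part) for part in match.groups()))


def bump(version, part):
    """Return the next version after a major, minor, or patch change."""
    if part == "major":
        return Version(version.major + 1, 0, 0)
    if part == "minor":
        return Version(version.major, version.minor + 1, 0)
    if part == "patch":
        return Version(version.major, version.minor, version.patch + 1)
    raise ValueError(f"unknown version part: {part!r}")


def image_reference(repository, version):
    """Combine a repository name and a version into an image reference."""
    return f"{repository}:{str(version)}"


if __name__ == "__main__":
    current = parse_version("1.4.2")
    # A backwards-compatible feature increments the minor number.
    released = bump(current, "minor")
    print(image_reference("registry.example.com/bos/data-parser", released))
```

Under this convention, a breaking change increments the major number, a backwards-compatible feature the minor number, and a bug fix the patch number, so the tag alone tells the provisioning side what kind of change an image carries.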

Services provisioning

Containers provide an efficient way to package and execute applications, but managing them in a production setting requires careful oversight to prevent downtime. In production, if a container fails, another one must be initiated to maintain continuous availability. Although Docker is a robust tool for developing and deploying containerized applications locally, it lacks key features for managing and scaling containers in production. Docker can run multiple containers on a single host but does not inherently support essential capabilities like automatic scaling, restarting failed containers, or reallocating containers to a new host if one becomes unavailable. These functions are handled by orchestrators, specialized tools for managing containerized environments.
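
The behavior expected from an orchestrator can be illustrated with a toy reconciliation pass: the desired number of replicas is compared with the containers that are actually healthy and placed on an available host, and replacements are started for the missing ones. The sketch below is a conceptual model only. It is not the algorithm of any specific orchestrator, and the `Container` class, the `reconcile` function, and the naming scheme are hypothetical.

```python
from dataclasses import dataclass
from itertools import count


@dataclass
class Container:
    name: str
    host: str
    healthy: bool = True


def reconcile(containers, desired_replicas, available_hosts, name_counter):
    """Run one reconciliation pass and return the resulting container list.

    Containers that failed, or that sit on a host that is no longer
    available, are dropped. Replacements are placed on the available
    host that currently runs the fewest containers.
    """
    if not available_hosts:
        raise ValueError("at least one host must be available")
    running = [
        c for c in containers if c.healthy and c.host in available_hosts
    ]
    while len(running) < desired_replicas:
        # Pick the least-loaded host; ties go to the first host in the list.
        host = min(
            available_hosts,
            key=lambda h: sum(c.host == h for c in running),
        )
        running.append(Container(name=f"parser-{next(name_counter)}", host=host))
    # Scale down if more replicas are running than desired.
    return running[:desired_replicas]


if __name__ == "__main__":
    state = [
        Container("parser-a", "node-1"),
        Container("parser-b", "node-2", healthy=False),  # crashed container
        Container("parser-c", "node-3"),                  # host went offline
    ]
    new_state = reconcile(state, 3, ["node-1", "node-2"], count(1))
    for container in new_state:
        print(container)
```

A real orchestrator runs such a pass continuously and also takes resource requests, health probes, and placement policies into account.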

Kubernetes, commonly referred to as "K8s", is an open-source container orchestration platform that automates deployment, scaling, and management of containerized applications. Originally developed by Google and now maintained by the Cloud Native Computing Foundation (CNCF), Kubernetes provides a platform-independent way to manage and scale containers across clusters of hosts. It leverages a control plane and APIs to handle container scheduling and distribution, offering features such as automatic restarting of failed containers, rescheduling on alternate hosts if needed, and distributing traffic across container replicas to keep the load balanced. Kubernetes is widely used across various environments, including on-premises servers, cloud platforms, and hybrid systems, making it a favored solution for building modern, microservices-based architectures. Supported by a large community, Kubernetes effectively complements Docker, offering a complete framework for managing and scaling containerized applications. This orchestration helps overcome some of Docker's production limitations, facilitating the development and maintenance of large, resilient applications.

In the case study presented in this thesis, the services are deployed using a Kubernetes infrastructure managed internally by the campus IT department. The resource configurations for these services were specified through configuration files, utilizing Go templates and Helm charts to streamline and standardize the deployment process.
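
The actual charts are not reproduced here. As a rough illustration of what such templating achieves, the following sketch maps a small set of values onto a Kubernetes `Deployment` manifest, in the same spirit as a Helm chart rendering its templates from a values file. It is written in Python rather than as a Go template, and the function `render_deployment`, the value names, and the image reference are assumptions made for this example.

```python
import json

# Hypothetical values, playing the role of a chart's values file.
EXAMPLE_VALUES = {
    "name": "data-parser",
    "replicas": 2,
    "image": {"repository": "registry.example.com/bos/data-parser", "tag": "1.4.2"},
    "resources": {"limits": {"cpu": "500m", "memory": "256Mi"}},
}


def render_deployment(values):
    """Fill a Deployment manifest (apps/v1) from a dictionary of values."""
    labels = {"app": values["name"]}
    image = f'{values["image"]["repository"]}:{values["image"]["tag"]}'
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": values["name"], "labels": labels},
        "spec": {
            "replicas": values["replicas"],
            # The selector must match the labels of the pod template.
            "selector": {"matchLabels": labels},
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": values["name"],
                            "image": image,
                            "resources": values["resources"],
                        }
                    ]
                },
            },
        },
    }


if __name__ == "__main__":
    # Manifests can be expressed in JSON as well as YAML.
    print(json.dumps(render_deployment(EXAMPLE_VALUES), indent=2))
```

Keeping the values separate from the manifest structure is what allows the same template to be reused across services: only the name, image tag, replica count, and resource limits change from one deployment to the next.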